
Beyond the Chatbot - How Generative AI is Learning to Master the Physical World

For the last two years, we have watched Generative AI conquer the digital realm. It has written our emails, debugged our code, and generated art that wins competitions. But there has always been a glass screen separating AI from reality. GPT-4 can write a poem about a dishwasher, but it cannot load one.

That is changing. We are currently witnessing a pivotal shift in computer science: the transition of Generative AI from the world of bits to the world of atoms.

This isn't just about smarter robots. It is about applying the probabilistic power of Large Language Models (LLMs) and diffusion models to physics, chemistry, and biology. Here is what recent research says about how GenAI is waking up to the real world.

1. The Rise of Generalist Robots (VLA Models)

In traditional robotics, if you wanted a robot to pick up an apple, you had to hard-code the physics of the grip, the recognition of the apple, and the trajectory of the arm. It was brittle.

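The breakthrough came with Vision-Language-Action (VLA) models. Just as ChatGPT learned language by reading the internet, VLAs borrow their knowledge of the world from vision-language models trained on web-scale images and text, then learn to act by training on recorded robot demonstrations, emitting motor commands the way an LLM emits words.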

The Research - A prime example is Google DeepMind’s RT-2 (Robotic Transformer 2). Unlike earlier robot policies trained only on robot data, RT-2 builds on a vision-language model pretrained on web-scale data. If you tell it to pick up the extinct animal, and there is a plastic dinosaur on the table, it picks up the dinosaur.

Why this matters - The robot was never explicitly trained on dinosaurs. It used semantic knowledge from the web (knowing what a dinosaur is) and translated it into a physical action. This is arguably the ChatGPT moment for robotics: reasoning and acting in a single model.
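To make "emitting motor commands like words" concrete: the RT-2 paper describes representing robot actions as strings of tokens, with each continuous action dimension discretized into 256 bins. The sketch below shows only that discretize-and-decode step. The normalized range of -1 to 1, the 7-value action layout, and the function names `action_to_tokens` and `tokens_to_action` are hypothetical choices for illustration, not DeepMind's code.

```python
from typing import List, Sequence

NUM_BINS = 256  # RT-2 reports discretizing each action dimension into 256 bins
LOW, HIGH = -1.0, 1.0  # hypothetical normalized range for every action dimension


def action_to_tokens(action: Sequence[float], low: float = LOW, high: float = HIGH,
                     num_bins: int = NUM_BINS) -> List[int]:
    """Map each continuous action value to an integer bin index (its 'token')."""
    tokens = []
    for value in action:
        clipped = min(max(value, low), high)            # keep the value in range
        frac = (clipped - low) / (high - low)           # normalize to [0, 1]
        tokens.append(min(int(frac * num_bins), num_bins - 1))  # top edge -> last bin
    return tokens


def tokens_to_action(tokens: Sequence[int], low: float = LOW, high: float = HIGH,
                     num_bins: int = NUM_BINS) -> List[float]:
    """Decode bin indices back to continuous values at each bin's center."""
    width = (high - low) / num_bins
    return [low + (t + 0.5) * width for t in tokens]


# Hypothetical 7-value action (e.g. position deltas, rotation deltas, gripper).
action = [0.1, -0.25, 0.0, 0.0, 0.5, -1.0, 1.0]
tokens = action_to_tokens(action)
print(tokens)  # [140, 96, 128, 128, 192, 0, 255]
print(tokens_to_action(tokens))  # each value within half a bin of the original
```

Because actions become ordinary integers, the same transformer that predicts text tokens can predict them, which is the design choice that lets web knowledge and robot control share one model.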

2. Generative Discovery in Material Science

Perhaps the highest-impact application of Physical AI isn't visible to the naked eye. It’s happening at the atomic level. Designing new materials (for batteries, solar panels, or chips) can take decades of trial and error. Generative AI is turning this into an inverse design problem: start from the properties you want and work backward to structures that might have them.

The Research - In late 2023, researchers at Google DeepMind published a paper in Nature detailing GNoME (Graph Networks for Materials Exploration). This AI didn't just tweak existing knowledge; it discovered 2.2 million new crystal structures, of which about 380,000 are predicted to be the most stable and are therefore promising candidates for synthesis in the lab.

The implication - DeepMind describes the haul as equivalent to nearly 800 years’ worth of materials knowledge. This is generative in the truest sense: it "hallucinates" new physical structures, but the most promising ones are checked with quantum-mechanical calculations, so they actually obey the laws of physics.
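Stability in this kind of screening is usually judged by a candidate's energy relative to the convex hull of known phases: a compound whose formation energy lies on or below the hull is predicted not to decompose into competing phases, and GNoME's "stable" materials are counted in these terms. The sketch below computes energy above the hull for a toy two-element A-B system, where composition x is the fraction of B. All compositions, energies, and names are made-up illustrative values, not GNoME data, and real pipelines work with many-element hulls built from quantum-mechanical energies.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (fraction of B, formation energy in eV/atom)


def cross(o: Point, a: Point, b: Point) -> float:
    """2D cross product of o->a and o->b (positive means a counter-clockwise turn)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def lower_hull(points: List[Point]) -> List[Point]:
    """Lower convex hull via Andrew's monotone chain (assumes unique compositions)."""
    hull: List[Point] = []
    for p in sorted(points):
        # Drop the last hull point while it would make a clockwise (non-convex) turn.
        while len(hull) >= 2 and cross(hull[-2], hull[-1], p) <= 0:
            hull.pop()
        hull.append(p)
    return hull


def hull_energy_at(hull: List[Point], x: float) -> float:
    """Linearly interpolate the hull energy at composition x."""
    for (x0, e0), (x1, e1) in zip(hull, hull[1:]):
        if x0 <= x <= x1:
            return e0 + (x - x0) / (x1 - x0) * (e1 - e0)
    raise ValueError("composition outside the hull's range")


def energy_above_hull(known: List[Point], candidate: Point) -> float:
    """Distance (eV/atom) of a candidate above the hull of known phases; <= 0 means stable."""
    return candidate[1] - hull_energy_at(lower_hull(known), candidate[0])


# Hypothetical known phases: pure A, pure B, and a compound AB.
known_phases = [(0.0, 0.0), (1.0, 0.0), (0.5, -0.4)]
# Hypothetical generated candidates to screen.
candidates = {"A3B": (0.25, -0.3), "AB3": (0.75, -0.1)}
for name, point in candidates.items():
    e_hull = energy_above_hull(known_phases, point)
    verdict = "on/below hull (stable)" if e_hull <= 0 else "above hull (unstable)"
    print(f"{name}: {e_hull:+.3f} eV/atom, {verdict}")
```

A generative screen runs this kind of filter millions of times; candidates that land below the hull become new hull points and reshape what counts as stable for the next round.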

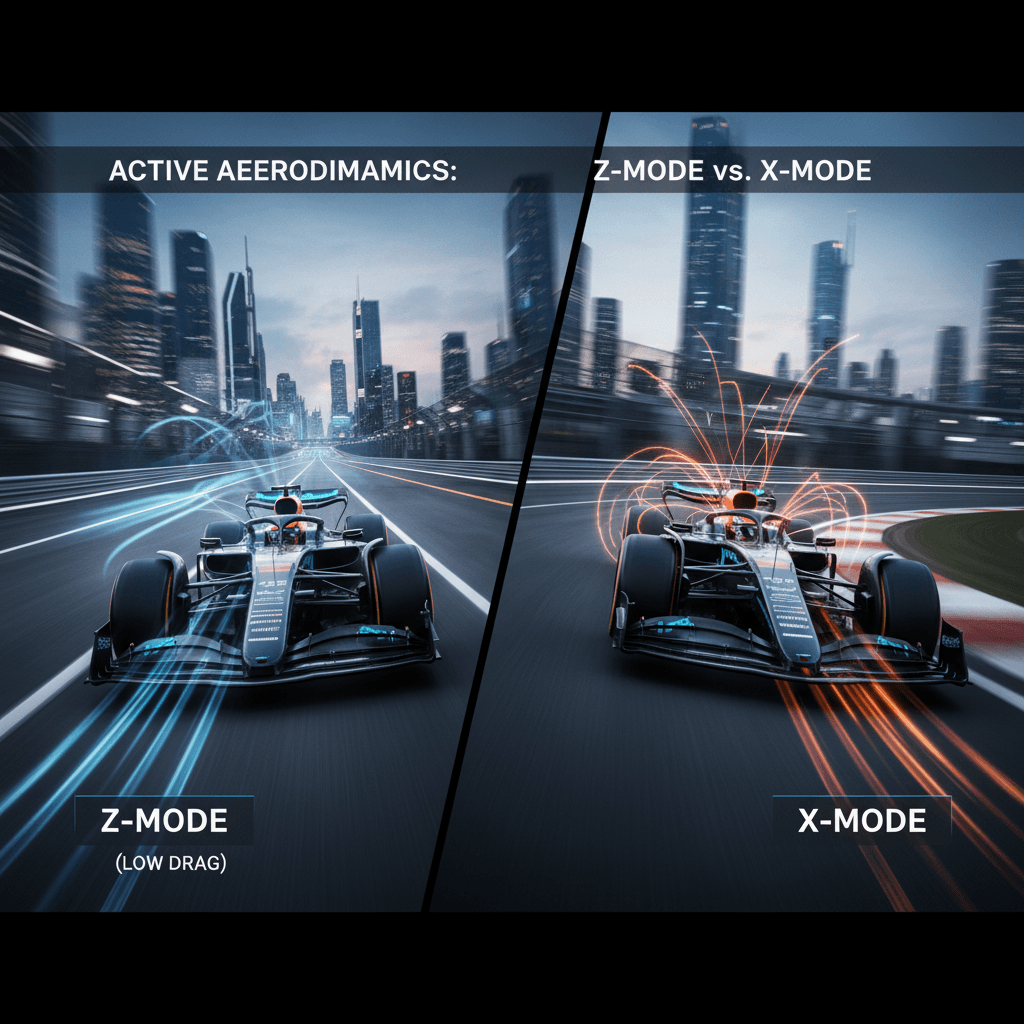

3. World Models and Simulating Physics

If you want to train an AI to drive a car or fly a drone, you cannot do it only in the real world: it’s too dangerous. You need a simulation. But coding a simulation that perfectly mirrors reality is incredibly hard.

Generative AI is now being used to learn these simulations directly from data. Models that predict how an environment will evolve in response to an agent's actions are called World Models.
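In its simplest form, a world model is a learned function that predicts the next state from the current state and action, so an agent can "imagine" outcomes before acting. The sketch below fits a one-dimensional linear model, x_next = a*x + b*u, by least squares on logged transitions and then rolls it forward. The linear form, the toy "real" dynamics in `real_step`, and all function names are assumptions for illustration; the world models discussed here are large neural networks, not a two-parameter fit.

```python
from typing import List, Tuple

Transition = Tuple[float, float, float]  # (state x, action u, next state)


def fit_linear_world_model(data: List[Transition]) -> Tuple[float, float]:
    """Least-squares fit of next_state ≈ a*x + b*u via the 2x2 normal equations."""
    sxx = sum(x * x for x, _, _ in data)
    sxu = sum(x * u for x, u, _ in data)
    suu = sum(u * u for _, u, _ in data)
    sxy = sum(x * y for x, _, y in data)
    suy = sum(u * y for _, u, y in data)
    det = sxx * suu - sxu * sxu
    if abs(det) < 1e-12:
        raise ValueError("data must vary both state and action independently")
    a = (sxy * suu - sxu * suy) / det  # Cramer's rule for the first unknown
    b = (sxx * suy - sxu * sxy) / det  # Cramer's rule for the second unknown
    return a, b


def imagine(a: float, b: float, x0: float, actions: List[float]) -> List[float]:
    """Roll the learned model forward without touching the real environment."""
    states = [x0]
    for u in actions:
        states.append(a * states[-1] + b * u)
    return states


def real_step(x: float, u: float) -> float:
    """Hypothetical 'real' environment the model has to learn."""
    return 0.9 * x + 0.5 * u


# Log a few real transitions, then learn the dynamics from them.
logged: List[Transition] = []
state = 1.0
for u in [0.0, 1.0, -1.0, 0.5, 0.0, 2.0]:
    nxt = real_step(state, u)
    logged.append((state, u, nxt))
    state = nxt

a_hat, b_hat = fit_linear_world_model(logged)
print(f"learned a={a_hat:.3f}, b={b_hat:.3f}")  # learned a=0.900, b=0.500
print(imagine(a_hat, b_hat, x0=0.0, actions=[1.0, 1.0, 1.0]))
```

Once the dynamics are learned, `imagine` can evaluate many candidate action sequences cheaply, which is the whole appeal of training in a learned simulation instead of the real world.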

The Research - NVIDIA’s Eureka agent tackles a related piece of the problem. It taught a simulated robot hand to perform complex pen-spinning tricks, a feat of dexterity that is incredibly difficult to program manually. Eureka uses a Large Language Model (GPT-4) to write its own reward functions as code, trains the robot against them in a physics simulator, and iterates on the code based on the results until the robot succeeds. Skills learned this way in simulation can then be carried over to physical robots (Sim-to-Real Transfer), although closing that gap remains an open challenge.
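The Eureka loop can be sketched as a search over candidate reward functions: generate several reward programs, train a policy against each in simulation, score every trained policy on the real task metric, and keep the best (Eureka then feeds these results back to the LLM so it can write improved rewards). In the sketch below the LLM is replaced by a hard-coded dictionary of candidate rewards and "training" is a brute-force search over a one-number policy. The task, the candidates, and every name here are hypothetical and only mimic the structure of the loop, not NVIDIA's implementation.

```python
from typing import Callable, Dict, List

TARGET = 0.7  # hypothetical goal position the robot should reach

# Stand-ins for reward programs an LLM might write (hypothetical).
CANDIDATE_REWARDS: Dict[str, Callable[[float], float]] = {
    "move_right": lambda pos: pos,                     # naive: always push further
    "neg_abs_error": lambda pos: -abs(pos - TARGET),   # distance-based shaping
    "neg_sq_error": lambda pos: -(pos - TARGET) ** 2,  # smooth distance penalty
}

ACTION_GRID: List[float] = [i / 10 for i in range(11)]  # policy = one action in [0, 1]


def simulate(action: float) -> float:
    """Toy simulator: the final position simply equals the chosen action."""
    return action


def train_policy(reward: Callable[[float], float]) -> float:
    """'Train' by picking the action that maximizes the candidate reward in simulation."""
    return max(ACTION_GRID, key=lambda a: reward(simulate(a)))


def task_fitness(action: float) -> float:
    """Ground-truth success metric used to rank rewards (1.0 = goal reached)."""
    return 1.0 if abs(simulate(action) - TARGET) < 0.05 else 0.0


def reward_search() -> str:
    """Return the name of the candidate reward whose trained policy scores best."""
    scores = {name: task_fitness(train_policy(fn)) for name, fn in CANDIDATE_REWARDS.items()}
    return max(scores, key=lambda name: scores[name])  # ties go to the first candidate


print(reward_search())  # neg_abs_error
```

The key design point the sketch preserves is that rewards are judged by the task outcome they produce, not by how plausible their code looks, which is what lets a bad reward like `move_right` be discarded automatically.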

4. Predicting the Planet - AI Weather Models

Traditional weather forecasting relies on Numerical Weather Prediction (NWP): massive supercomputers crunching fluid dynamics equations. It is slow and computationally expensive.

Generative AI treats weather not as a physics problem, but as a data problem.

The Research - GraphCast, another DeepMind breakthrough published in Science, outperforms the gold-standard operational weather system (ECMWF’s HRES) on 90% of 1,380 test targets. It predicts cyclone tracks, atmospheric rivers, and extreme temperatures more accurately, and produces a 10-day forecast in under a minute on a single Google TPU. It does this by learning the patterns of weather from decades of historical data, loosely analogous to how LLMs learn the patterns of language.
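One detail behind GraphCast's speed is worth spelling out: according to the Science paper, the model takes the two most recent atmospheric states (6 hours apart), predicts the state 6 hours ahead, and then feeds its own prediction back in, so a 10-day forecast is 40 such steps. The sketch below shows only that autoregressive rollout. `toy_step` is a hypothetical stand-in for the trained graph neural network, and a "state" here is a short list of numbers (say, one temperature and one pressure value) rather than a global grid of variables.

```python
from typing import Callable, List

State = List[float]  # stand-in for a global grid of weather variables

STEP_HOURS = 6
HORIZON_HOURS = 10 * 24  # 10-day forecast


def toy_step(prev: State, curr: State) -> State:
    """Hypothetical stand-in for the learned model: extend the recent trend, damped by half."""
    return [c + 0.5 * (c - p) for p, c in zip(prev, curr)]


def rollout(step: Callable[[State, State], State], prev: State, curr: State,
            horizon_hours: int = HORIZON_HOURS) -> List[State]:
    """Autoregressive forecast: each prediction becomes an input to the next step."""
    forecasts: List[State] = []
    for _ in range(horizon_hours // STEP_HOURS):
        nxt = step(prev, curr)
        forecasts.append(nxt)
        prev, curr = curr, nxt  # slide the two-state input window forward
    return forecasts


trajectory = rollout(toy_step, prev=[280.0, 1000.0], curr=[281.0, 999.0])
print(len(trajectory))  # 40
print(trajectory[0])    # [281.5, 998.5]
```

The trade-off of this design is that errors compound: every step consumes the previous prediction, so a learned model has to stay stable over dozens of its own outputs, not just one.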

The Challenge - When AI Hallucinates in Reality

When ChatGPT hallucinates, you get a wrong fact. When a Physical AI hallucinates, a robot might drop a chemical vial or a self-driving car might misinterpret a stop sign.

The current research frontier is focused on Uncertainty Quantification: teaching the AI to know when it doesn't know. Researchers are also pairing generative models with hard-coded safety layers (physics guardrails), sometimes framed as a robot "constitution", that the generative model cannot override.
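A common, simple form of uncertainty quantification is ensemble disagreement: run several independently trained models and treat a large spread in their predictions as a sign that the system is outside what it knows. The sketch below combines that idea with a hard-coded guardrail that clamps a commanded speed to a fixed limit no matter what the models output. The speed limit, the uncertainty threshold, and the example predictions are hypothetical values, and this is one illustrative pattern, not a description of any particular lab's system.

```python
import statistics
from typing import List, Optional, Tuple

MAX_SPEED = 0.5          # hypothetical hard limit (m/s) the model cannot override
UNCERTAINTY_LIMIT = 0.1  # hypothetical max allowed spread between ensemble members


def ensemble_estimate(predictions: List[float]) -> Tuple[float, float]:
    """Mean prediction and its spread (population standard deviation)."""
    return statistics.mean(predictions), statistics.pstdev(predictions)


def safe_command(predictions: List[float]) -> Optional[float]:
    """Return a speed command, or None to stop and defer to a human."""
    mean, spread = ensemble_estimate(predictions)
    if spread > UNCERTAINTY_LIMIT:
        return None  # models disagree: the system "knows it doesn't know" and abstains
    # Guardrail applied outside the model: clamp to the physical speed limit.
    return max(-MAX_SPEED, min(MAX_SPEED, mean))


print(safe_command([0.30, 0.32, 0.31]))   # models agree and stay within limits
print(safe_command([0.90, 0.92, 0.91]))   # 0.5 (models agree, but output is clamped)
print(safe_command([0.10, 0.60, -0.40]))  # None (models disagree, so it abstains)
```

Keeping the clamp as plain code outside the learned models is the point: it holds even when every model in the ensemble is confidently wrong.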
